The main theme of our research is living with technologies: we explore techniques and applications of technologies in our natural living environment to support and improve our lifestyle. We aim to embed “normal” technologies in the environment (ambient intelligence) and to use robots as autonomous agents within our living environment, so that robots can live alongside humans and support them effectively. By “normal”, we mean solutions that are realistic and easy to deploy, rather than ones that require elaborate procedures and setups or are confined to laborious experimental settings. To that end, we are currently focusing on two fundamental capabilities of an autonomous robot: self-learning its knowledge and skills, and maneuvering dexterously among humans. As smart devices and the Internet of Things (IoT) become the norm in many modern homes, integrating the smart environment with robotics (cyber-physical systems) will open up more options for real-world solutions. In addition, being located on the island of Borneo, home to one of the oldest rainforests in the world, we are interested in applying digital technologies in the natural habitat of wildlife.

Self-Learning AI | Cyber-Physical Systems | Human Robot Interaction | Autonomous Vehicles | Robot Navigation and Control

Self-Learning AI

Keywords: AI, self-learning AI, autonomous AI, autonomous agents, self-supervised learning, unsupervised learning, knowledge discovery, autonomy

Cyber-Physical Systems

Keywords: Cyber-physical systems, internet of things, intelligent space, intelligent systems, smart systems

Human Robot Interaction

Keywords: Human robot interaction, human agent interaction, human machine interaction

Autonomous Vehicles

Keywords: Autonomous vehicles, autonomous cars, self-driving cars, intelligent cars, intelligent transportation

Robot Navigation and Control

Keywords: Robotics, robot navigation, robot localization, robot manipulation, robot control, simultaneous localization and mapping (SLAM)

Defense against Adversarial Attacks on Deep Convolutional Neural Networks

In this research, we explore techniques for defending deep learning networks against adversarial attacks.
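
To make the threat model concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a standard attack that defenses of this kind are commonly evaluated against. It assumes a toy logistic-regression classifier in place of a deep CNN so that the input gradient can be written in closed form; the weights, the input and `epsilon` are hypothetical values chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """P(class 1 | x) for a logistic-regression model (toy stand-in for a CNN)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)  # -1, 0 or +1

def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method: x_adv = x + epsilon * sign(dL/dx).

    For binary cross-entropy loss L on a logistic model, dL/dx = (p - y) * w,
    so the input gradient is available in closed form.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical model and a correctly classified input with true label y = 1.
w, b = [1.0, -2.0], 0.0
x, y = [0.5, 0.1], 1
x_adv = fgsm(w, b, x, y, epsilon=0.1)
print(predict(w, b, x), predict(w, b, x_adv))  # confidence in class 1 drops
```

Adversarial training, one common family of defenses, adds such perturbed inputs with their true labels back into the training data.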

#self-learning-ai #adversarial-machine-learning

Improving Ego-Vehicle Control in the Deep Learning Training Pipeline for Autonomous Cars

In this project, we attempted to modify the learning pipeline for autonomous cars to improve performance when different ego-vehicles are used.

#autonomous-vehicles #autonomous-cars

Addressing In-Domain and Out-of-Domain Separation in Open World Recognition

In this research, we examine work in Open World Recognition that shares a similar goal with our work in self-learning AI.
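
A widely used baseline for separating in-domain from out-of-domain inputs is to threshold the classifier's maximum softmax probability (MSP). The sketch below shows only that baseline, not the approach taken in this project; the threshold of 0.5 is a hypothetical value that would normally be tuned on validation data.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_open_world(logits, threshold=0.5):
    """Return the predicted class index, or None if the input looks out-of-domain."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None

print(classify_open_world([5.0, 0.0, 0.0]))  # confident: in-domain class 0
print(classify_open_world([1.0, 1.0, 1.0]))  # uniform: None (out-of-domain)
```

The weakness of this baseline is that deep networks can be confidently wrong on unfamiliar inputs, which is one reason open world recognition remains an active research problem.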

#self-learning-ai #open-world-recognition #open-set-recognition

Authentication for Decentralized Cyber-Physical Systems

This work is motivated by the need for a suitable authentication mechanism for our proposed decentralized File-based Internet of Things (IoT) System. We are developing an authentication mechanism for decentralized Cyber-Physical Systems (CPSs) by integrating blockchain technology, including Non-Fungible Tokens (NFTs).

#cyber-physical-systems #internet-of-things #block-chain #nft

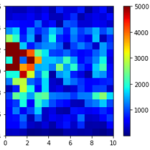

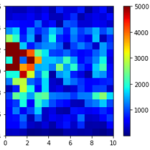

Automatic Deep Clustering based on Multi-Trial Vector-based Differential Evolution (MTDE)

In this work, we adapt Multi-Trial Vector-based Differential Evolution (MTDE) for use in a clustering algorithm.
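
For readers unfamiliar with evolutionary clustering, the sketch below shows the general idea using classic DE/rand/1/bin differential evolution rather than MTDE: each individual encodes `k` centroids, and its fitness is the within-cluster sum of squared errors (SSE). MTDE's multiple trial-vector strategies and the deep feature extractor are not reproduced here, and the population size, `f`, `cr` and generation count are hypothetical defaults.

```python
import random

def sse(centroids, points):
    """Within-cluster sum of squared errors: each point counts toward its nearest centroid."""
    return sum(
        min(sum((pi - ci) ** 2 for pi, ci in zip(p, c)) for c in centroids)
        for p in points
    )

def de_cluster(points, k, pop_size=20, generations=100, f=0.5, cr=0.9, seed=0):
    """Search for k centroids with DE/rand/1/bin, minimising SSE.

    Each individual is a flat vector of k * dim centroid coordinates.
    pop_size must be at least 4 so that three distinct donors exist.
    """
    rng = random.Random(seed)
    dim = len(points[0])
    n = k * dim
    lo = [min(p[d] for p in points) for d in range(dim)]
    hi = [max(p[d] for p in points) for d in range(dim)]

    def decode(vec):
        return [vec[i * dim:(i + 1) * dim] for i in range(k)]

    # Initialise centroids uniformly inside the data's bounding box.
    pop = [[rng.uniform(lo[j % dim], hi[j % dim]) for j in range(n)]
           for _ in range(pop_size)]
    fit = [sse(decode(v), points) for v in pop]

    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: three distinct donors other than the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(n)  # guarantees at least one mutated gene
            # Binomial crossover between the target and the mutant vector.
            trial = [pop[a][j] + f * (pop[b][j] - pop[c][j])
                     if (rng.random() < cr or j == j_rand) else pop[i][j]
                     for j in range(n)]
            # Greedy selection: keep the trial if it is no worse.
            t_fit = sse(decode(trial), points)
            if t_fit <= fit[i]:
                pop[i], fit[i] = trial, t_fit

    best = min(range(pop_size), key=fit.__getitem__)
    return decode(pop[best]), fit[best]

points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centroids, err = de_cluster(points, k=2)
print(sorted(centroids), err)  # centroids near (0.5, 0.5) and (10.5, 10.5)
```

Unlike k-means, the population-based search does not depend on a single initialisation, at the cost of many more fitness evaluations.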

#self-learning-ai #unsupervised #clustering

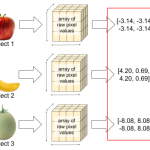

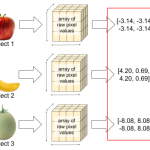

Self-Supervised Object Discovery in Images

In this research, we aim to give intelligent systems the ability to distinguish one object from another, or to discover the different objects in their environment, without supervised training, i.e. without labels. The ultimate goal is to enable an autonomous agent such as a personal robot to discover the different objects in its environment by continuously observing its surroundings through vision sensors.

#self-learning-ai #object-discovery #unsupervised #self-supervised

Enhancing Odometry Estimation through Deep Learning-based Sensor Fusion

In this work, we explored ways to improve the accuracy and robustness of odometry estimation through multi-modal sensor fusion.
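
As a reference point for what a learned fusion model has to improve on, the sketch below shows classical inverse-variance fusion (equivalent to a single Kalman update) of two independent estimates of the same quantity, for example a heading change from wheel encoders and from an IMU gyro. This is a swapped-in classical technique, not the deep learning-based fusion studied in this work, and the sensor readings and variances are hypothetical.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Fuse two independent, unbiased estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance; the fused
    variance is never larger than either input variance.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    k = var_a / (var_a + var_b)        # Kalman gain applied to estimate b
    fused = est_a + k * (est_b - est_a)
    fused_var = (1.0 - k) * var_a      # equals var_a * var_b / (var_a + var_b)
    return fused, fused_var

# Hypothetical heading-change readings (radians) over one time step.
wheel = (0.10, 0.04)  # wheel odometry: affected by slip, assumed larger variance
gyro = (0.13, 0.01)   # IMU gyro: assumed smaller variance over a short step
print(fuse(*wheel, *gyro))
```

Fixed variances like these are exactly what a deep fusion model can replace, by learning how much to trust each modality from the data.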

#robotics #robot-localization #robot-navigation #odometry #multimodal

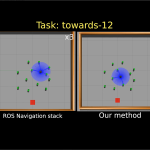

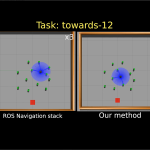

Socially Aware Reinforcement Learning-based Crowd Robot Navigation

In this research, we explore the use of Reinforcement Learning (RL), especially Deep Reinforcement Learning (DRL), for robot navigation in crowded environments. The ultimate goal is to use RL to improve the local and global planning of the navigation system, targeting crowded or complex environments where existing local and global planning techniques are inefficient.
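
A central design choice in DRL crowd navigation is the reward function. The sketch below follows the general shape used in several published crowd-navigation setups (a goal reward, a collision penalty and a discomfort penalty when the robot comes too close to a person), but every constant is a hypothetical placeholder rather than this project's reward.

```python
def social_reward(reached_goal, min_dist, dt,
                  goal_reward=1.0,
                  collision_penalty=-0.25,
                  discomfort_dist=0.2,
                  discomfort_scale=0.5):
    """Per-step reward for a robot moving among pedestrians.

    min_dist is the smallest gap (metres) between the robot and any human
    at this step; a value <= 0 means the bodies overlap, i.e. a collision.
    dt is the control time step in seconds.
    """
    if min_dist <= 0.0:
        return collision_penalty
    if reached_goal:
        return goal_reward
    if min_dist < discomfort_dist:
        # Penalty grows linearly as the robot intrudes into personal space.
        return (min_dist - discomfort_dist) * discomfort_scale * dt
    return 0.0
```

Keeping the discomfort term small relative to the collision penalty lets the policy trade a brief close pass against a long detour, which is the kind of balance socially aware navigation has to tune.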

#robotics #robot-navigation #social-robots-navigation #crowd-navigation

Place Recognition with Biosonar

We are exploring the use of bat-inspired biosonar echolocation signals for place recognition.

#robotics #robot-localization #place-recognition

Robot Guides

This will be a series of projects leading to the development of a robot guide software system (“guide” as in a tour guide) that can be adapted for deployment in venues such as shopping malls, museums and airports.

#robotics #robot-localization #robot-navigation #service-robot

Implementation of Deep Learning Algorithms for Self-Driving Cars

This is our initial attempt to learn about autonomous driving. We carried out several final-year projects that attempted to convert a toy car into a self-driving car.

#autonomous-vehicles #autonomous-cars

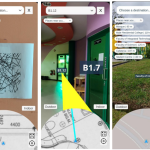

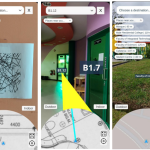

AR-based Indoor and Outdoor Navigation

In this project, we are developing an AR-based indoor navigator mobile app that guides a user from any location to a desired destination inside a building. The ultimate goal is to apply the indoor positioning technique to mobile robots. The project started as an AR-based outdoor navigator application for the UBD campus, which we have since extended with indoor navigation capability.

#robotics #robot-localization #robot-navigation #indoor-navigation #augmented-reality #mobile-app

File-based Internet of Things (IoT) System

In this work, we are developing a new form of IoT implementation in which connectivity is achieved by leveraging non-IoT cloud services widely used by the general public, such as OneDrive, Dropbox and Google Drive. For now, we call this a file-based IoT system (FIoT).
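
The sketch below illustrates the file-based idea under stated assumptions: a device publishes its state as a JSON file in a folder that a cloud client (OneDrive, Dropbox or Google Drive) would keep in sync, and a controller on another machine reads that file. A local temporary directory stands in for the synced folder, and the file naming scheme and message fields are hypothetical, not the FIoT format.

```python
import json
import os
import tempfile
import time

def publish(folder, device_id, state):
    """Write a device's state as <device_id>.json inside the (synced) folder.

    The file is written under a temporary name first and then renamed, so a
    reader never opens a half-written <device_id>.json.
    """
    path = os.path.join(folder, f"{device_id}.json")
    message = {"device": device_id, "timestamp": time.time(), "state": state}
    fd, tmp_path = tempfile.mkstemp(dir=folder, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(message, f)
    os.replace(tmp_path, path)  # atomic on POSIX within one filesystem
    return path

def read_state(folder, device_id):
    """Return the latest state published by device_id, or None if absent."""
    path = os.path.join(folder, f"{device_id}.json")
    try:
        with open(path) as f:
            return json.load(f)["state"]
    except FileNotFoundError:
        return None

# Local stand-in for a cloud-synced folder.
with tempfile.TemporaryDirectory() as shared:
    publish(shared, "lamp-01", {"on": True, "brightness": 70})
    print(read_state(shared, "lamp-01"))    # {'on': True, 'brightness': 70}
    print(read_state(shared, "sensor-99"))  # None
```

In a real deployment, the latency of the sync client rather than the local file I/O would dominate how quickly a command reaches a device.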

#cyber-physical-systems #internet-of-things #file-based-iot

ROS-based Robot Navigation on Eddie Robot

In this project, we implemented the Robot Operating System (ROS) navigation stack on the Eddie robot platform, replacing its controller with a Raspberry Pi and a laptop. We learned to set up ROS, implement the navigation stack, and configure and write the transformation, odometry and motor control nodes specific to the Eddie robot.
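
The odometry node is where encoder ticks become a pose estimate for the navigation stack. The sketch below shows the standard differential-drive dead-reckoning update such a node would perform; it is plain Python without ROS, and the tick resolution and wheel base are hypothetical values rather than the Eddie robot's actual parameters. In a ROS node, the resulting pose would be published as a `nav_msgs/Odometry` message and as the `odom` to `base_link` transform.

```python
import math

def update_pose(x, y, theta, left_ticks, right_ticks,
                metres_per_tick, wheel_base):
    """Dead-reckon a differential-drive pose from encoder tick deltas.

    left_ticks/right_ticks are the ticks counted since the last update.
    Uses midpoint integration: the robot is assumed to travel along the
    heading halfway through this step's rotation.
    """
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_centre = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    x += d_centre * math.cos(theta + d_theta / 2.0)
    y += d_centre * math.sin(theta + d_theta / 2.0)
    # Wrap the heading to [-pi, pi].
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta

# Hypothetical robot: 1 cm per tick, 0.5 m between the wheels.
print(update_pose(0.0, 0.0, 0.0, 100, 100, 0.01, 0.5))  # 1 m straight ahead
```

Because each update integrates small errors from wheel slip and tick quantisation, the navigation stack relies on a localizer such as AMCL to correct the drift against the map.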

#robotics #robot-localization #robot-navigation #slam

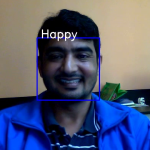

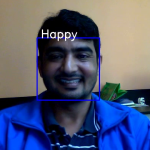

Multimodal Human Intention Perception for Human Robot Interaction

One of the key abilities for effective human-robot interaction is a robot's understanding of human intention. In this project, we focus on recognizing human facial and body expressions from multimodal input signals, i.e. visual and audio.
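
One simple way to combine visual and audio cues is late fusion: each modality's classifier outputs class probabilities, and these are combined by a weighted average. The sketch below assumes that scheme purely for illustration; the class labels, probabilities and equal weights are hypothetical, and the project may fuse the modalities differently.

```python
def late_fusion(prob_lists, weights):
    """Weighted average of per-modality class-probability vectors."""
    if len(prob_lists) != len(weights):
        raise ValueError("need one weight per modality")
    total_w = sum(weights)
    n_classes = len(prob_lists[0])
    return [sum(w * probs[c] for probs, w in zip(prob_lists, weights)) / total_w
            for c in range(n_classes)]

labels = ["neutral", "happy", "angry"]  # hypothetical expression classes
visual = [0.5, 0.4, 0.1]                # e.g. from a facial-expression model
audio = [0.2, 0.7, 0.1]                 # e.g. from a speech-emotion model
fused = late_fusion([visual, audio], weights=[0.5, 0.5])
print(labels[max(range(len(fused)), key=fused.__getitem__)])  # happy
```

Here the audio channel tips an ambiguous facial reading toward "happy", which is the benefit multimodal input is meant to provide.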

#human-robot-interaction #human-emotion-recognition #face-detection #multimodal

Implementation of SLAM on iRobot Create Robot (non-ROS)

In this project, we implemented Simultaneous Localization and Mapping (SLAM) in Python on an iRobot Create robot, the Wanderer-v1. The implementation is a FastSLAM written from scratch, including the sensor model, the motion model and particle-filter localization. In addition, we implemented A* search for path planning. The robot is able to navigate to a given location while performing SLAM and avoiding obstacles.
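
The A* path planner mentioned above can be sketched on an occupancy grid as follows. This is a generic 4-connected grid A* with a Manhattan-distance heuristic, assuming the SLAM map has been thresholded into free (0) and occupied (1) cells; it is not the Wanderer-v1 code itself.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a 0/1 occupancy grid, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None

grid = [[0, 0, 0],
        [1, 1, 0],
        [0, 0, 0]]
print(astar(grid, (0, 0), (2, 0)))
# [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

Since the map is rebuilt as SLAM runs, a planner like this would be re-run whenever newly mapped obstacles block the current path.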

#robotics #robot-localization #robot-navigation #slam

Unsupervised Human Activities Recognition

We are developing a framework for autonomous human activity recognition (HAR) suited to our natural living environment, i.e. sensor-less homes. The framework uses an unsupervised learning approach that enables a robot, acting as a mobile sensor hub, to autonomously collect data and learn different human activities without manual (human) labeling of the data.

#self-learning-ai #human-activities-analysis #human-activities-discovery #human-activities-recognition #unsupervised #self-supervised

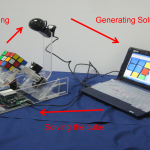

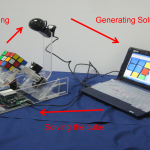

Rubik’s Cube Solving Robot

In this project, we constructed a robotic gripper to solve a 3×3 Rubik’s cube. The robot uses a webcam to detect the faces of the Rubik’s cube and a Basic Stamp microcontroller to control the movement of the gripper. The A* search algorithm runs on a laptop connected to the Basic Stamp microcontroller.

#robotics #robot-manipulation #robot-control

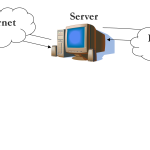

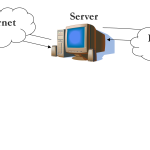

Robot Control Over Internet

In this project, we learned to remotely control a LEGO Mindstorms robot over the internet to provide remote surveillance with live camera feedback.

#robotics #robot-control #telepresence