Human Activities Recognition (HAR) is an important component of assistive technologies; however, HAR technologies have not yet been widely adopted in our homes. Two main hurdles stand in the way: the expensive infrastructure they require and their reliance on supervised learning. Many HAR research works have been carried out assuming an environment embedded with sensors. In addition, the majority of HAR technologies use supervised approaches, in which labeled data are needed to train the recognition system. In reality, our natural living environments are not embedded with sensors, and labeled data are not available in them. We are developing a framework for autonomous HAR suitable for our natural living environments, i.e. sensor-less homes. The framework uses an unsupervised learning approach to enable a robot, acting as a mobile sensor hub, to autonomously collect data and learn the different human activities without requiring manual (human) labeling of the data.

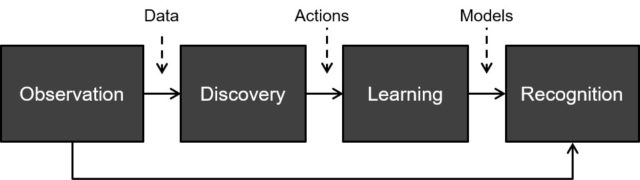

To develop a system for autonomous human activities recognition, we have proposed a pipeline of processing stages, set within the broader perspective of human activity analysis.

The different stages apply different machine learning approaches, both supervised and unsupervised. The learning and recognition stages have been extensively studied in the HAR research community, mainly with supervised learning techniques. The discovery stage, on the other hand, is a much less studied problem. It attempts to differentiate or group different actions or activities. This resembles the ability of a child to recognize that one action is different from or similar to another without knowing what the actions are, i.e. without labels or without being told. If the discovery stage can successfully group the different activities into their respective groups, these groups can be fed to the subsequent stage, which learns a model for each group, i.e. each activity, using pseudo-labels. Human-robot interaction can then convert the pseudo-labels into linguistic labels in the language of the human interacting with the robot. This resembles young children interacting with adults or older children to put names to the activity models they have formed or learned through observation.
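To make the flow of the pipeline concrete, here is a minimal, hypothetical sketch of discovery, learning with pseudo-labels, recognition, and naming through interaction. It is not the framework's implementation: k-means with a known number of clusters (`k=2`) stands in for the discovery stage, a centroid-plus-radius model stands in for the learned activity model, and the feature vectors, the tolerance `tol`, and the activity names are all made up for illustration.

```python
import math


def kmeans(points, k, iters=20):
    """Stand-in discovery stage: group unlabeled feature vectors into k clusters."""
    # Deterministic farthest-point initialisation (an assumption, chosen for repeatability)
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(far))
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign every point to its nearest centroid; the index is a pseudo-label
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Move each centroid to the mean of its members
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def learn_models(points, labels):
    """Stand-in learning stage: one simple model (centre, radius) per pseudo-label."""
    models = {}
    for lab in sorted(set(labels)):
        members = [p for p, l in zip(points, labels) if l == lab]
        centre = [sum(col) / len(members) for col in zip(*members)]
        radius = max(math.dist(p, centre) for p in members)
        models[lab] = (centre, radius)
    return models


def recognize(x, models, tol=0.5):
    """Stand-in recognition stage: nearest model, or None if x fits no model."""
    lab, (centre, radius) = min(models.items(), key=lambda kv: math.dist(x, kv[1][0]))
    return lab if math.dist(x, centre) <= radius + tol else None


# Hypothetical 2-D activity features observed by the robot (no labels)
features = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
labels, _ = kmeans(features, k=2)        # discovery: pseudo-labels 0 and 1
models = learn_models(features, labels)  # learning: one model per pseudo-label
names = {0: "sitting", 1: "waving"}      # interaction: hypothetical names given by a person


def name_of(x):
    """Map a new observation to a linguistic label, or "unknown"."""
    return names.get(recognize(x, models), "unknown")
```

With these made-up values, `name_of((0.05, 0.05))` gives `"sitting"` and `name_of((10.0, 0.0))` gives `"unknown"`, the kind of observation that a discovery stage would need to treat as a possible new activity.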

Our initial work focuses on solving the different problems in the discovery stage, i.e. human activities discovery.

We believe that the ability to self-learn is important for any intelligent system. Recently, this type of machine learning has been referred to as self-supervised learning. The concepts and techniques developed for the discovery stage are expected to be applicable to other domains, such as object recognition.

Latent Features for Human Activities Discovery

This is Raziq’s MSc work, in which we continue our development of latent features for human activities discovery.

People

- Muhammad Amirul Raziq bin Haji Rosman

- Ong Wee Hong

- Owais Ahmed Malik

- Daphne Teck Ching Lai

Data/Codes

Publications

Media

Application of Multi-Objective Evolutionary Computation in Human Activities Discovery

This is Parham’s PhD work. In this work, we explore the application of multi-objective evolutionary algorithms to the problem of human activities discovery (HAD). In particular, we improved the feature extraction and clustering stages of HAD by integrating various AI and computational techniques.
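The core idea that a multi-objective optimizer works with can be sketched briefly: candidate clustering solutions are scored on several conflicting objectives, and only the non-dominated (Pareto-optimal) candidates are kept. The sketch below is a hypothetical illustration, not the published method. It assumes two objectives chosen for simplicity (sum of squared errors and number of clusters, both minimized), uses hand-written candidate centroid sets where a particle swarm or other evolutionary search would generate them, and omits the final game-theoretic selection from the front.

```python
import math


def sse(points, centroids):
    """Objective 1 (minimise): sum of squared distances to the nearest centroid."""
    return sum(min(math.dist(p, c) ** 2 for c in centroids) for p in points)


def objectives(points, centroids):
    """Assumed objective vector: (SSE, number of clusters), which conflict."""
    return (sse(points, centroids), len(centroids))


def dominates(a, b):
    """a dominates b if it is no worse in every objective and better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(candidates, points):
    """Keep the candidate clusterings that no other candidate dominates."""
    scored = [(cand, objectives(points, cand)) for cand in candidates]
    return [cand for cand, f in scored if not any(dominates(g, f) for _, g in scored)]


# Hypothetical 2-D data with two obvious groups
pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
c1 = [(5, 0.5)]                              # one cluster: simple but loose
c2 = [(0, 0.5), (10, 0.5)]                   # two well-placed clusters
c3 = [(0, 0), (10, 1)]                       # two poorly placed clusters
c4 = [(0, 0), (0, 1), (10, 0), (10, 1)]      # one cluster per point
c5 = [(0, 0.5), (10, 0.5), (50, 50)]         # c2 plus a useless extra cluster
front = pareto_front([c1, c2, c3, c4, c5], pts)
```

Here `front` keeps `c1`, `c2` and `c4`, each a different trade-off between compactness and number of clusters, while `c3` and `c5` are dropped because `c2` is at least as good on both objectives. Choosing one solution from such a front is a separate step.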

People

- Parham Hadikhani

- Daphne Teck Ching Lai (Main Supervisor)

- Ong Wee Hong

Data/Codes

Publications

- P. Hadikhani, D. T. C. Lai and W.-H. Ong, “Flexible multi-objective particle swarm optimization clustering with game theory to address human activity discovery fully unsupervised,” in Image and Vision Computing, vol. 145, 2024, ISSN 0262-8856, doi: 10.1016/j.imavis.2024.104985. (pdf)

- P. Hadikhani, D. T. C. Lai and W.-H. Ong, “Human Activity Discovery with Automatic Multi-Objective Particle Swarm Optimization Clustering with Gaussian Mutation and Game Theory,” in IEEE Transactions on Multimedia, doi: 10.1109/TMM.2023.3266603. (pdf)

- P. Hadikhani, D. T. C. Lai and W.-H. Ong, “A Novel Skeleton-Based Human Activity Discovery Using Particle Swarm Optimization With Gaussian Mutation,” in IEEE Transactions on Human-Machine Systems, vol. 53, no. 3, pp. 538-548, June 2023, doi: 10.1109/THMS.2023.3269047. (pdf, supp)

Media

Investigation of Features and Unsupervised Learning Approach for Human Activities Discovery

This is the MSc work of Amran. In this work, we investigated the effectiveness of different features and clustering algorithms in human activities discovery.

People

- Md Amran Hossen

- Farrah Koh Yen Swan

- Ong Wee Hong

Data/Codes

Publications

- Hossen, M.A., Hong, O.W., Caesarendra, W. (2022). “Investigation of the Unsupervised Machine Learning Techniques for Human Activity Discovery”. In: Triwiyanto, T., Rizal, A., Caesarendra, W. (eds) Proceedings of the 2nd International Conference on Electronics, Biomedical Engineering, and Health Informatics. Lecture Notes in Electrical Engineering, vol 898. Springer, Singapore. https://doi.org/10.1007/978-981-19-1804-9_38 (pdf)

Media

Autonomous Learning and Recognition of Human Actions based on An Incremental Approach of Clustering

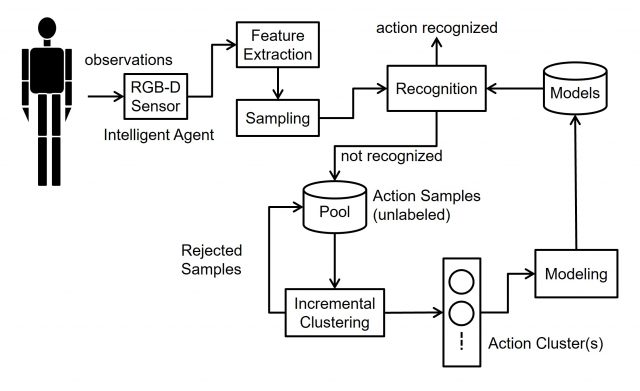

In this work, the initial concept of human activities discovery was developed. We proposed the concept of, and a framework for, an autonomous (self-learning) system that proceeds from observing human activities, to learning models of the newly discovered (previously unknown) activities, to recognizing those activities. Both the discovery and the learning of activities were done without requiring labels. The proposed framework can be generalized as a self-learning system for other types of recognition, e.g. object recognition.
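The incremental clustering approach named in the title is not spelled out on this page. As a rough illustration only, the sketch below uses a generic leader-style online clustering, a swapped-in textbook technique rather than the published method: each new observation either joins the nearest existing cluster, if it lies within a hypothetical distance `threshold`, or starts a new cluster, i.e. a newly discovered activity. The class name `IncrementalClusterer` and the observation stream are made up for illustration.

```python
import math


class IncrementalClusterer:
    """Hypothetical leader-style online clustering: one pass, clusters created on demand."""

    def __init__(self, threshold):
        self.threshold = threshold  # assumed distance limit for "same activity"
        self.centroids = []         # running mean of each discovered cluster
        self.counts = []            # number of observations absorbed by each cluster

    def observe(self, x):
        """Return the pseudo-label of x, creating a new cluster if x is novel."""
        if self.centroids:
            j = min(range(len(self.centroids)),
                    key=lambda i: math.dist(x, self.centroids[i]))
            if math.dist(x, self.centroids[j]) <= self.threshold:
                self.counts[j] += 1
                n = self.counts[j]
                # Incremental mean update: c <- c + (x - c) / n
                self.centroids[j] = [c + (xi - c) / n
                                     for c, xi in zip(self.centroids[j], x)]
                return j
        # Too far from every known cluster (or none exist yet): a new activity
        self.centroids.append(list(x))
        self.counts.append(1)
        return len(self.centroids) - 1


# Hypothetical stream of 2-D activity features arriving one at a time
stream = [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0), (0.1, 0.0), (5.1, 4.9), (9.0, 0.0)]
clusterer = IncrementalClusterer(threshold=1.0)
labels = [clusterer.observe(x) for x in stream]
```

On this stream, `labels` comes out as `[0, 0, 1, 0, 1, 2]`: the last observation is far from both known groups and opens a third cluster. A single pass like this never revisits earlier assignments, and its result depends on the threshold and on the order of the observations, which are the kinds of trade-offs an incremental discovery method has to handle.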

People

- Ong Wee Hong

- Leon Palafox (Collaborator)

- Takafumi Koseki (Supervisor)

Data/Codes

Publications

- W. Ong, L. Palafox, T. Koseki, “Autonomous Learning and Recognition of Human Action based on An Incremental Approach of Clustering,” IEEJ Transactions on Electronics, Information and Systems, Vol. 135, No. 9, 2015 (pdf)

- W. Ong, L. Palafox, T. Koseki, “An Incremental Approach of Clustering for Human Activity Discovery,” IEEJ Transactions on Electronics, Information and Systems, Vol. 134, No. 11, 2014 (pdf)

- W. Ong, T. Koseki, L. Palafox, “An Unsupervised Approach for Human Activity Detection and Recognition,” International Journal of Simulation: Systems, Science and Technology (IJSSST), Vol. 14, No. 5, 2013 (pdf)

- W. Ong, T. Koseki, L. Palafox, “Investigation of Cluster Validity Indices for Unsupervised Human Activity Discovery,” in Proceedings of The 2013 International Conference on Artificial Intelligence (ICAI’13), Vol. 1, pp. 315-321, 22-25 July 2013 (pdf)

- W. Ong, T. Koseki, L. Palafox, “Unsupervised Human Activity Detection with Skeleton Data from RGB-D Sensor,” in Proceedings of The Fifth International Conference on Computational Intelligence, Communication Systems and Networks (CICSyN), pp. 30-35, 5-7 June 2013 (pdf)

- W. Ong and T. Koseki, “Unsupervised Activity Detection Based On Human Range of Motion Features,” Seoul National University-University of Tokyo (SNU-UT) Joint Seminar, Mar. 2013 (pdf)

- W. Ong, L. Palafox, and T. Koseki, “Investigation of Feature Extraction for Unsupervised Learning in Human Activity Detection,” Bulletin of Networking, Computing, Systems, and Software, North America, 2, Jan. 2013 (presented at The Second International Workshop on Networking, Computing, Systems, and Software, Okinawa, Japan in Dec 2012) (pdf)