Enhancing the Accuracy and Robustness of Odometry through Deep Learning-based Sensor Fusion

This is Nazrul’s MSc project. We explored ways to improve the accuracy and robustness of odometry estimation through multi-modal sensor fusion. Odometry estimation is crucial for the autonomous navigation of mobile robots and autonomous vehicles. Many existing methods rely on deep neural networks, but they tend to favor cameras as the primary sensor and often require human labeling or supervision. In our research, we introduced an automated approach that trains a deep neural network in a self-supervised manner to predict an agent’s trajectory or position from LiDAR (laser range) scans and Inertial Measurement Unit (IMU) data. In addition, we applied an LSTM to the input sequences to capture temporal features.

People

- Muhammad Nazrul Fitri Bin Hj Ismail

- Owais Ahmed Malik (Main Supervisor)

- Ong Wee Hong

Data/Codes

Publications

- N. Ismail, O. W. Hong and O. A. Malik, “Optimizing Odometry Accuracy through Temporal Information in Self-Supervised Deep Networks,” 2023 20th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 2023, pp. 490-495, doi: 10.1109/UR57808.2023.10202307. (pdf)

Media

The video below shows the performance of the hybrid visual odometry (VO) system Nazrul developed during the early stage of his MSc. In this work, we investigated the integration of learning-based and classical approaches in monocular VO, employing a geometric pipeline inspired by DF-VO. The pipeline consists of three key steps: feature extraction, correspondence establishment, and pose estimation. These steps use two types of correspondences: 2D-2D and 3D-2D. We introduced two custom implementations that differ from DF-VO. The first incorporates neural depth prediction and ORB feature extraction, while the second uses only a deep local feature extractor. Overall, our results with Monodepth and ORB feature extraction show that the method is competitive for 3D tracking on the KITTI dataset.

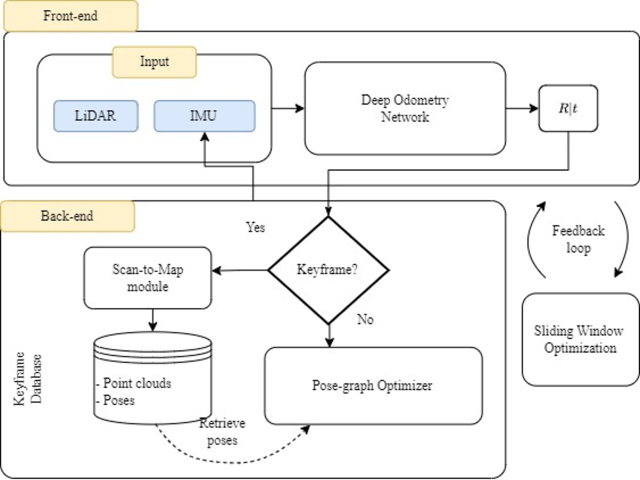
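
To illustrate the 3D-2D correspondences mentioned above, the following is a minimal sketch, not the project's actual code. It assumes a pinhole camera model: a pixel in one frame is lifted to a 3D point using a predicted depth, moved by a candidate relative pose, and projected into the next frame. The reprojection error against the matched pixel is the quantity a 3D-2D pose solver typically minimizes. The function names and the intrinsics in the usage note are hypothetical.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with a (predicted) depth to a 3D point in the camera frame."""
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return (x, y, depth)


def transform(R, t, p):
    """Apply a rigid transform (3x3 rotation R, translation t) to a 3D point p."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(point, fx, fy, cx, cy):
    """Project a 3D point in the camera frame onto the image plane (pinhole model)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def reprojection_error(pix_a, depth_a, pix_b, R, t, intrinsics):
    """Pixel distance between the match pix_b and the reprojection of pix_a under (R, t)."""
    point_a = backproject(pix_a[0], pix_a[1], depth_a, *intrinsics)
    u, v = project(transform(R, t, point_a), *intrinsics)
    return math.hypot(u - pix_b[0], v - pix_b[1])
```

For example, with hypothetical intrinsics `(700.0, 700.0, 600.0, 180.0)` for `(fx, fy, cx, cy)`, a point 10 m ahead on the optical axis still projects to the principal point after the camera moves 1 m forward, so its reprojection error against an unchanged match is zero.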

The video below shows the performance of the self-supervised end-to-end LiDAR odometry (LO) system developed during the later stage of Nazrul’s MSc. In this work, we proposed a self-supervised learning approach that leverages LiDAR and IMU data to enhance the accuracy and robustness of LO systems. The approach aims to learn a mapping function that accurately estimates 6 degrees of freedom (6-DoF) motion from unlabeled LiDAR scan sequences and raw IMU measurements. This mapping function is then used to predict the agent’s pose as it moves through the environment.
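
As a rough illustration of how per-step 6-DoF motion estimates become a trajectory, the sketch below chains relative poses into global positions. It is not the project's implementation. It assumes each estimate is a tuple `(tx, ty, tz, roll, pitch, yaw)` expressed in the previous sensor frame, with angles in radians and a Z-Y-X (yaw-pitch-roll) rotation convention. The function names are hypothetical.

```python
import math


def pose_to_matrix(tx, ty, tz, roll, pitch, yaw):
    """Build a 4x4 homogeneous transform with R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, tx],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, ty],
        [-sp, cp * sr, cp * cr, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def integrate_odometry(relative_poses):
    """Chain relative 6-DoF motions and return the global (x, y, z) after each step."""
    # Start at the origin with identity orientation.
    world = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    trajectory = []
    for rel in relative_poses:
        # Each relative motion is expressed in the previous frame, so post-multiply.
        world = matmul4(world, pose_to_matrix(*rel))
        trajectory.append((world[0][3], world[1][3], world[2][3]))
    return trajectory
```

For instance, four identical steps of 1 m forward followed by a 90-degree left turn, `(1.0, 0.0, 0.0, 0.0, 0.0, math.pi / 2)`, trace a square and return to the origin. Because each step is multiplied onto the previous result, small errors in the individual estimates accumulate along the trajectory, which is why per-step accuracy matters for odometry.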