One of the key abilities for effective human-robot interaction is a robot's understanding of human intention. In this project, we focus on recognizing human facial and body expressions from multimodal input signals, i.e. visual and audio.

Multi-modal Human Emotion Recognition

This is the final outcome of Bacha’s PhD work. In this work, we developed an audio-visual hybrid deep-learning network for human emotion recognition.

People

- Bacha Rehman

- Ong Wee Hong

- Trung Dung Ngo

Data/Codes

Publications

Media

Visual-Based Facial Expression Recognition

This is Bacha’s PhD work. Building on the improved real-time face detection, we progressed to visual-based facial expression recognition. We explored two deep learning approaches: feature-based DNNs and image-based CNNs. In our search for a well-performing facial expression recognition system, we experimented with different publicly available datasets and developed a framework that implements the development pipeline of an automatic facial expression recognition (AFER) system.

People

- Bacha Rehman

- Md Hafiq Anas

- Muhammad Amirul Akmal Haji Menjeni

- Ong Wee Hong

- Collaborators: Trung Dung Ngo

Data/Codes

- Bacha’s AFER development framework: https://github.com/ailabspace/bacha-afer-dev-framework

Publications

- Bacha Rehman, Wee Hong Ong, Trung Dung Ngo, “A Development Framework for Automated Facial Expression Recognition Systems”, the 4th International Conference on Computational Intelligence in Information Systems (CIIS 2020), 25-27 January 2021. https://doi.org/10.1007/978-3-030-68133-3_16 (pdf)

- Hafiq Anas, Bacha Rehman, Wee Hong Ong, “Deep Convolutional Neural Network Based Facial Expression Recognition in the Wild”, arXiv (2020) arXiv:2010.01301 (pdf), for participation in the FG-2020 Competition: Affective Behavior Analysis in-the-wild (ABAW), Expression Challenge category (Team Name: Robolab @ UBD)

Media

This is the result of real-time visual-based facial expression recognition using our DNN baseline model, trained on the processed CK+ dataset. It showed good real-time performance in recognizing expressions of eight (8) emotions.

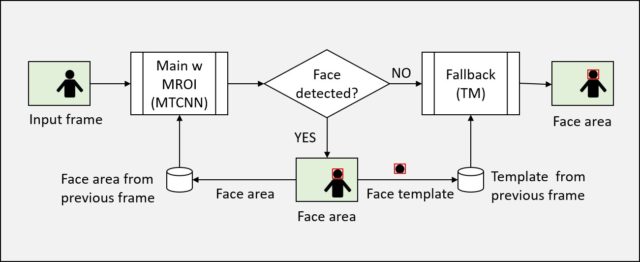

Face Detection

This is the initial stage of Bacha’s PhD work. Before working on facial expressions, we began by improving existing face detection techniques for real-time performance. In this work, we proposed combining a margin around the region of interest (ROI) with template matching to improve both detection rate and processing time.
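The margin-based ROI idea can be illustrated with a minimal sketch. After a face is found, the next search is limited to the previous face box, enlarged by a margin and clipped to the frame. The `(x, y, w, h)` box convention, the function names `margin_roi` and `to_frame_coords`, and the choice to express the margin as a fraction of the box size are all assumptions made for illustration. They are not details taken from the papers.

```python
from typing import Tuple

# Hypothetical box convention for this sketch: (x, y, width, height) in pixels.
Box = Tuple[int, int, int, int]


def margin_roi(prev_face: Box, margin: float, frame_w: int, frame_h: int) -> Box:
    """Enlarge the previous face box by `margin` (a fraction of its width and
    height) on every side, then clip it to the frame. The detector only needs
    to search this region in the next frame."""
    x, y, w, h = prev_face
    dx, dy = int(round(w * margin)), int(round(h * margin))
    x0, y0 = max(0, x - dx), max(0, y - dy)
    x1, y1 = min(frame_w, x + w + dx), min(frame_h, y + h + dy)
    return x0, y0, x1 - x0, y1 - y0


def to_frame_coords(face_in_roi: Box, roi: Box) -> Box:
    """Shift a box detected inside the ROI crop back into full-frame coordinates."""
    fx, fy, fw, fh = face_in_roi
    return fx + roi[0], fy + roi[1], fw, fh


roi = margin_roi((100, 80, 40, 40), 0.5, 320, 240)
print(roi)                                  # (80, 60, 80, 80)
print(to_frame_coords((5, 6, 30, 30), roi))  # (85, 66, 30, 30)
```

Each frame is cheaper to process because the detector scans a region much smaller than the full frame. The margin controls the trade-off: a larger margin tolerates more face motion between frames, but the search becomes slower.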

We have collected real-life videos from the public domain to evaluate the performance of our proposed algorithms.

People

Data/Codes

- FDTV-10 Face Detection and Tracking Videos Dataset – 10 (videos with annotation)

- FDTV-20 Face Detection and Tracking Videos Dataset – 20 (videos with annotation)
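Annotated videos such as these can be used to score a detector on detection rate and speed. Below is a minimal sketch of such an evaluation. It rests on three assumptions that are not taken from the dataset or the papers:

- The per-frame annotations have already been parsed into one `(x, y, w, h)` box per frame, or `None` when no face is annotated.
- A detection counts as correct when its intersection-over-union (IoU) with the annotation is at least 0.5.
- Speed is reported as average frames per second.

The actual FDTV annotation format and the metrics used in the publications may differ.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h); assumed convention


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def detection_rate(preds: Sequence[Optional[Box]],
                   truths: Sequence[Optional[Box]],
                   thresh: float = 0.5) -> float:
    """Fraction of frames with an annotated face where the predicted box
    overlaps the annotation with IoU >= thresh (assumed criterion)."""
    hits = total = 0
    for pred, truth in zip(preds, truths):
        if truth is None:
            continue  # no annotated face in this frame, so it is not scored
        total += 1
        if pred is not None and iou(pred, truth) >= thresh:
            hits += 1
    return hits / total if total else 0.0


def mean_fps(frame_times_s: Sequence[float]) -> float:
    """Average throughput, given per-frame processing times in seconds."""
    elapsed = sum(frame_times_s)
    return len(frame_times_s) / elapsed if elapsed > 0 else 0.0


truths = [(0, 0, 10, 10), (0, 0, 10, 10), None, (0, 0, 10, 10)]
preds = [(0, 0, 10, 10), (5, 5, 10, 10), (0, 0, 10, 10), None]
print(detection_rate(preds, truths))  # one hit out of three annotated frames
```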

Publications

- Rehman B., Hong O.W., Hong A.T.C. (2017) “Hybrid Model with Margin-Based Real-Time Face Detection and Tracking” In: Phon-Amnuaisuk S., Ang SP., Lee SY. (eds) Multi-disciplinary Trends in Artificial Intelligence. MIWAI 2017. Lecture Notes in Computer Science, vol 10607. Springer, Cham. https://doi.org/10.1007/978-3-319-69456-6_30 (pdf)

- Bacha Rehman, Ong Wee Hong, Abby Tan Chee Hong (2018) “Using Margin-based Region of Interest Technique with Multi-Task Convolutional Neural Network and Template Matching for Robust Face Detection and Tracking System” In: 2nd International Conference on Imaging, Signal Processing and Communication (ICISPC 2018). https://doi.org/10.1109/ICISPC44900.2018.9006678 (pdf)

- Rehman, B., Ong, W.H., Tan, A.C.H. et al., “Face detection and tracking using hybrid margin-based ROI techniques”, Vis Comput (2019). https://doi.org/10.1007/s00371-019-01649-y (pdf)

Media

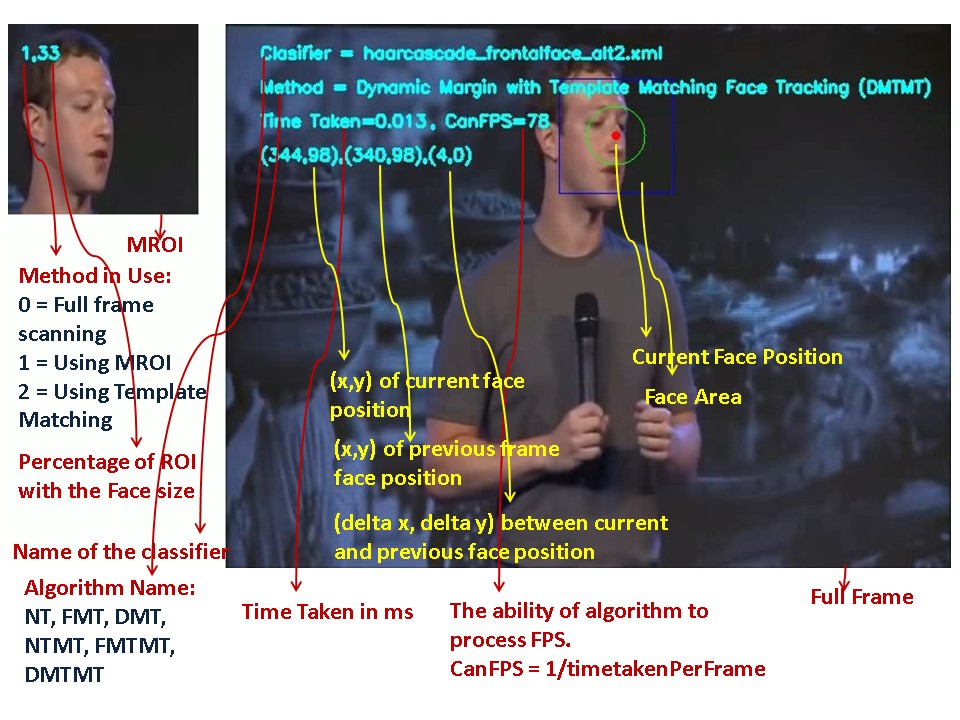

- We proposed a hybrid model with margin-based real-time face detection and tracking. We tested six different implementations of real-time face detection and tracking on 10 source videos. The following videos compare the six implementations on each source video and demonstrate the effectiveness of combining template matching with a margin-based ROI (region of interest) in improving face detection rate and speed. The right frame shows the source video annotated with performance data; the left frame shows the data being processed in each frame. More details are given in the published paper “Hybrid Model with Margin-Based Real-Time Face Detection and Tracking”.

Description of the annotations in the analysis video

- We extended the hybrid margin-based region of interest face detection and tracking system with a state-of-the-art CNN-based main routine. We also expanded the dataset to 20 real-life videos. The results show that the system improves the performance of the state-of-the-art face detection system. More details are given in the published paper “Using Margin-based Region of Interest Technique with Multi-Task Convolutional Neural Network and Template Matching for Robust Face Detection and Tracking System”.
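The hybrid idea of a margin-based ROI plus a template-matching fallback can be sketched as a single tracking step. This is a minimal illustration, not the published implementation. It makes the following assumptions:

- Frames are grayscale NumPy arrays.
- `detect` is a hypothetical callable that stands in for the main detection routine (for example a CNN-based detector). It returns a box inside the ROI or `None`.
- The values of `margin` and `min_score` are arbitrary.
- Returning `None` signals the caller to fall back to a full-frame search.

Template matching is written as brute-force, mean-subtracted normalised cross-correlation so the block stays self-contained. In practice, OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` computes the same score much faster.

```python
from typing import Callable, Optional, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x, y, w, h); assumed convention


def match_template(search: np.ndarray, templ: np.ndarray) -> Tuple[int, int, float]:
    """Brute-force zero-mean normalised cross-correlation.
    Returns the best (x, y, score), with score in [-1, 1]."""
    th, tw = templ.shape
    t = templ.astype(np.float64) - templ.mean()
    t_norm = np.sqrt((t * t).sum())
    best = (0, 0, -1.0)
    for y in range(search.shape[0] - th + 1):
        for x in range(search.shape[1] - tw + 1):
            win = search[y:y + th, x:x + tw].astype(np.float64)
            win = win - win.mean()
            denom = np.sqrt((win * win).sum()) * t_norm
            score = float((win * t).sum() / denom) if denom > 0 else 0.0
            if score > best[2]:
                best = (x, y, score)
    return best


def track_step(gray: np.ndarray, prev_face: Box, template: np.ndarray,
               detect: Callable[[np.ndarray], Optional[Box]],
               margin: float = 0.5, min_score: float = 0.6
               ) -> Tuple[Optional[Box], np.ndarray]:
    """One frame of the sketch: search a margin-enlarged ROI with the main
    detector and fall back to template matching if it misses.
    Returns (face box or None, template to use for the next frame)."""
    frame_h, frame_w = gray.shape
    x, y, w, h = prev_face
    dx, dy = int(round(w * margin)), int(round(h * margin))
    x0, y0 = max(0, x - dx), max(0, y - dy)
    x1, y1 = min(frame_w, x + w + dx), min(frame_h, y + h + dy)
    roi = gray[y0:y1, x0:x1]

    found = detect(roi)
    if found is not None:
        fx, fy, fw, fh = found
        face = (fx + x0, fy + y0, fw, fh)  # ROI coords -> frame coords
    else:
        th, tw = template.shape
        if roi.shape[0] < th or roi.shape[1] < tw:
            return None, template  # ROI too small; caller searches full frame
        mx, my, score = match_template(roi, template)
        if score < min_score:
            return None, template  # weak match; caller searches full frame
        face = (mx + x0, my + y0, tw, th)

    fx, fy, fw, fh = face
    # Refresh the template from the current frame so it follows appearance changes.
    return face, gray[fy:fy + fh, fx:fx + fw].copy()
```

The cheap ROI search handles most frames. Template matching recovers the face when the main detector misses it inside the ROI, and a full-frame search is only needed when both fail.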